Run the playground against an OpenAI-compliant model provider/proxy

The LangSmith Playground lets you use any model that is compliant with the OpenAI API.

Deploy an OpenAI-compliant model

Many providers offer OpenAI-compliant models or proxy services that wrap existing models with an OpenAI-compatible API. Some popular options include:

These tools allow you to deploy models with an API endpoint that follows the OpenAI specification. For implementation details, refer to the OpenAI API documentation.
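To make "follows the OpenAI specification" concrete, the sketch below is a toy server that answers the chat completions route with the same JSON response shape the OpenAI API uses. It is not a real model: it only echoes the last message back. The route `/v1/chat/completions`, the host and port, the function `make_server`, and the fallback model name `mock-model` are assumptions for illustration, and only the Python standard library is used.

```python
"""Toy OpenAI-compatible chat completions server (illustrative sketch only)."""
import json
import time
from http.server import BaseHTTPRequestHandler, HTTPServer


class ChatCompletionsHandler(BaseHTTPRequestHandler):
    # Only the chat completions route is implemented; any other path gets a 404.
    def do_POST(self):
        if self.path != "/v1/chat/completions":
            self.send_error(404, "Not Found")
            return
        length = int(self.headers.get("Content-Length", 0))
        body = json.loads(self.rfile.read(length) or b"{}")
        messages = body.get("messages", [])
        last = messages[-1]["content"] if messages else ""
        # Echo the last message back, wrapped in the OpenAI response shape.
        payload = {
            "id": "chatcmpl-mock",
            "object": "chat.completion",
            "created": int(time.time()),
            "model": body.get("model", "mock-model"),
            "choices": [
                {
                    "index": 0,
                    "message": {"role": "assistant", "content": f"echo: {last}"},
                    "finish_reason": "stop",
                }
            ],
        }
        data = json.dumps(payload).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


def make_server(host="127.0.0.1", port=8000):
    # Hypothetical helper; port=0 lets the OS pick a free port.
    return HTTPServer((host, port), ChatCompletionsHandler)


if __name__ == "__main__":
    server = make_server()
    print("Serving on http://127.0.0.1:8000/v1")
    server.serve_forever()
```

Run it and point an OpenAI-style client at `http://127.0.0.1:8000/v1`. It ignores `stream=True` and every other request parameter, so a client that asks for streamed server-sent events will not get them; treat it as a reference for the response shape, not as a deployment.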

Use the model in the LangSmith Playground

Once you have deployed a model server, you can use it in the LangSmith Playground.

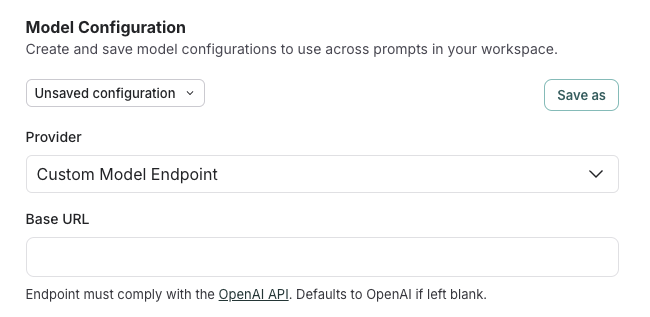

Configure the playground

- Open the LangSmith Playground.
- Change the provider to Custom Model Endpoint.
- Enter your model's endpoint URL in the Base URL field.
- Configure any additional parameters, such as API keys, if required.

The playground uses ChatOpenAI from langchain-openai under the hood, automatically configuring it with your custom endpoint as the base_url.
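With langchain-openai installed, this setup corresponds roughly to `ChatOpenAI(model=..., base_url=..., api_key=...)`. To show what that means on the wire without third-party dependencies, the sketch below builds the HTTP request an OpenAI-style client sends: it appends `/chat/completions` to the base URL and adds a bearer token only when a key is given. The function name `build_chat_request` and the example URL and model name are hypothetical, and this mirrors the public OpenAI request shape rather than any LangSmith internals.

```python
import json
import urllib.request


def build_chat_request(base_url, model, messages, api_key=None, **params):
    """Build (but do not send) an OpenAI-style chat completions request.

    Hypothetical helper for illustration only.
    """
    # OpenAI-style clients append the route to the base URL, so the base URL
    # usually already carries the version prefix, e.g. "http://localhost:8000/v1".
    url = base_url.rstrip("/") + "/chat/completions"
    # Extra keyword arguments (temperature, max_tokens, ...) go into the JSON body.
    body = {"model": model, "messages": messages, **params}
    headers = {"Content-Type": "application/json"}
    if api_key:
        # The OpenAI API uses bearer auth; some self-hosted servers ignore it.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )
```

For example, `build_chat_request("http://localhost:8000/v1", "my-model", [{"role": "user", "content": "Hello"}], temperature=0.2)` returns a POST request for `http://localhost:8000/v1/chat/completions`, which `urllib.request.urlopen` can send.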

Testing the connection

Click Start to test the connection. If properly configured, you should see your model's responses appear in the playground. You can then experiment with different prompts and parameters.
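If no responses appear, it can help to call the endpoint directly and see which layer fails. The sketch below sends one non-streaming request and separates three failure modes: nothing answered, the server answered with an error status, or the body is not shaped like an OpenAI chat completion. The names `smoke_test` and `parse_reply` and the `ping` prompt are hypothetical, and a passing check does not guarantee that your server supports every option the playground may send (for example streaming).

```python
"""Smoke-test a custom endpoint outside the playground (illustrative sketch)."""
import json
import urllib.error
import urllib.request


def parse_reply(raw):
    """Return the assistant text from an OpenAI-style chat completion body."""
    try:
        payload = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"response is not JSON: {exc}") from exc
    choices = payload.get("choices") if isinstance(payload, dict) else None
    if not choices:
        raise ValueError("response has no 'choices'; is this a chat completions route?")
    message = choices[0].get("message") or {}
    content = message.get("content")
    if content is None:
        raise ValueError("first choice has no 'message.content'")
    return content


def smoke_test(base_url, model, api_key=None, timeout=30):
    body = {"model": model, "messages": [{"role": "user", "content": "ping"}]}
    headers = {"Content-Type": "application/json"}
    if api_key:
        headers["Authorization"] = f"Bearer {api_key}"
    req = urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return parse_reply(resp.read())
    except urllib.error.HTTPError as exc:
        # The server answered with an error status (bad key, unknown model, wrong path...).
        # HTTPError subclasses URLError, so it must be caught first.
        raise RuntimeError(f"HTTP {exc.code} from {req.full_url}: {exc.read()[:200]!r}") from exc
    except urllib.error.URLError as exc:
        # Nothing answered: wrong host or port, DNS failure, refused or timed-out connection.
        raise RuntimeError(f"could not reach {req.full_url}: {exc.reason}") from exc
```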

Save your model configuration

To reuse your custom model configuration in future sessions, see the LangSmith documentation on saving and managing model configurations.